The European High-Performance Computing Joint Undertaking (EuroHPC JU) aims to develop a world-class supercomputing ecosystem in Europe and, to that end, is procuring and deploying pre-exascale and petascale systems. These systems will be capable of running large and complex applications. The demands of application stakeholders cover not only High-Performance Computing (HPC) but also artificial intelligence (AI) and data analytics.

The eFlows4HPC project aims to provide a software stack that eases the development of workflows involving HPC, AI and data analytics components. The project aims to support dynamic workflows, in which the set of nodes in the workflow graph can change during execution in response to changes in the input data or the execution context, and which can react to events as they occur. The runtime systems supporting this execution should manage resources efficiently, in terms of both time and energy.
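To make the notion of a dynamic workflow concrete, the following is a minimal sketch that uses only the Python standard library; it is not the eFlows4HPC stack or PyCOMPSs. The task functions `simulate` and `refine`, the `threshold` parameter and the numeric values are hypothetical placeholders chosen for illustration. The point it shows is that the `refine` nodes are added to the graph only when intermediate results call for them.

```python
from concurrent.futures import ThreadPoolExecutor


def simulate(member: int) -> float:
    """Hypothetical stand-in for an HPC simulation task."""
    return member * 1.5


def refine(value: float) -> float:
    """Hypothetical extra analysis step, spawned only when needed."""
    return value / 2


def run_dynamic_workflow(members, threshold: float) -> dict:
    """Run one simulation per member and add refine tasks based on results."""
    results = {}
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Static part of the graph: one simulation node per ensemble member.
        sims = {m: pool.submit(simulate, m) for m in members}
        refinements = {}
        for m, future in sims.items():
            value = future.result()
            results[m] = value
            # Dynamic part: this node exists only if the data demands it.
            if value > threshold:
                refinements[m] = pool.submit(refine, value)
        # Collect the results of the nodes that were added at run time.
        for m, future in refinements.items():
            results[m] = future.result()
    return results


if __name__ == "__main__":
    print(run_dynamic_workflow([1, 2, 3, 4], threshold=4.0))
```

In a real deployment a workflow runtime, rather than a local thread pool, would track the data dependencies and schedule the tasks on the available resources.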

Another objective of the project is to provide mechanisms that make HPC easier to use and reuse for wider communities. For this purpose, the HPC Workflows as a Service (HPCWaaS) methodology has been proposed. The goal is to provide methodologies and tools that enable the sharing and reuse of existing workflows, and that assist users in adapting workflow templates to create new workflow instances.

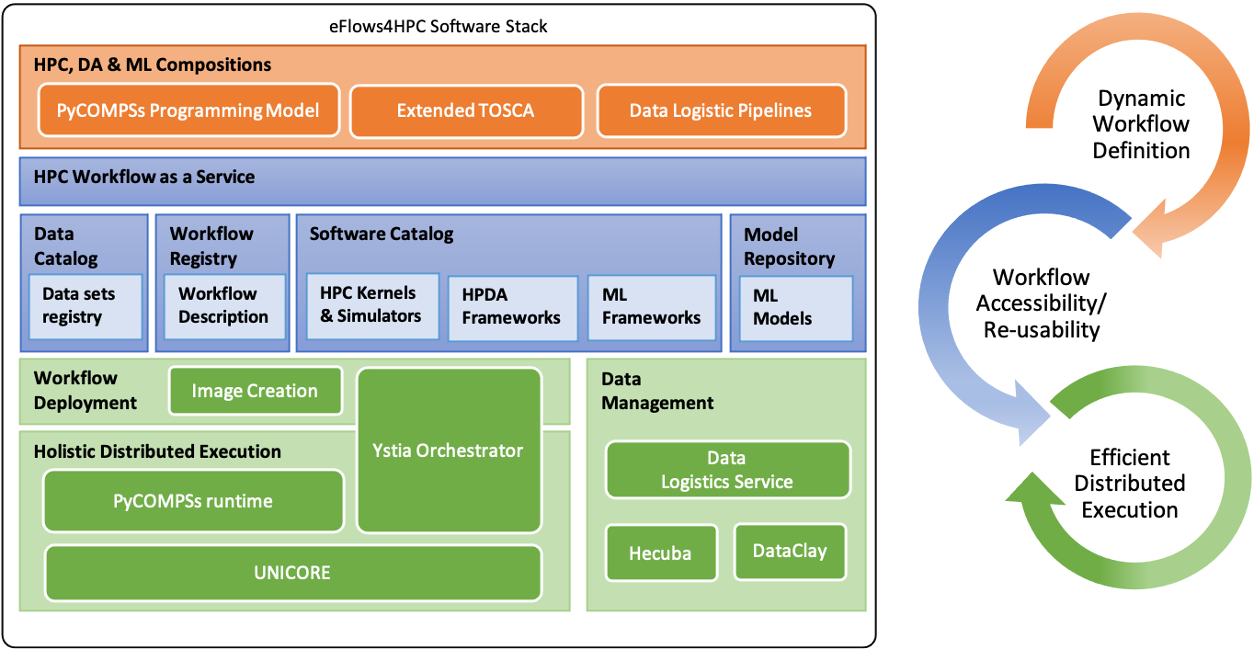

eFlows4HPC software stack.

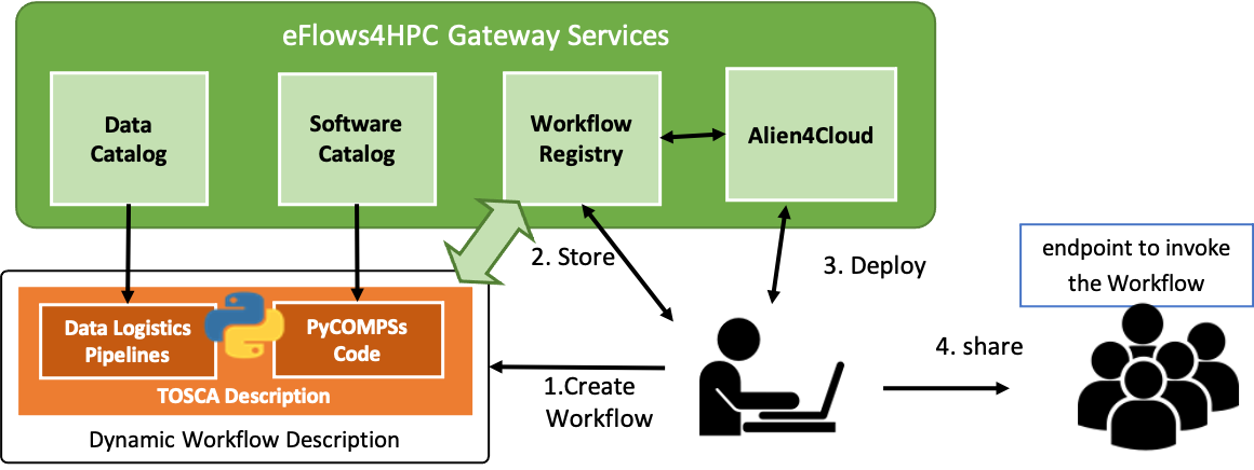

The eFlows4HPC software stack includes components to develop the workflows at three levels: high-level topology of the workflows using extended TOSCA, required data transfers with the Data Logistic Pipelines and the computational aspects of the workflow with PyCOMPSs. Once a workflow has been developed, it is registered in the Workflow Registry. Similarly, the different components of the workflows, pre-trained AI models and data sets are registered in a set of catalogues and repositories. All these registries and catalogues are used by the HPCWaaS interface, which provides a REST API to deploy and execute the workflows. On execution, the stack also provides a set of runtime libraries to support the workflow execution and data management.

HPCWaaS methodology.

The eFlows4HPC developments are demonstrated in the project through three pillar applications in the areas of digital twins for manufacturing, climate modeling and prediction, and urgent computing for natural hazards. Pillar I deals with the construction of digital twins for the prototyping of complex manufactured objects, integrating state-of-the-art adaptive solvers with machine learning and data mining and contributing to the Industry 4.0 vision. Pillar II develops innovative adaptive workflows for climate and for the study of tropical cyclones in the context of the CMIP6 experiment, including in-situ analytics. Pillar III explores the modelling of natural catastrophes, in particular earthquakes and their associated tsunamis, shortly after such an event is recorded.

Coordinated by the Barcelona Supercomputing Center (BSC), the eFlows4HPC consortium comprises 16 partners from seven countries. Their technical expertise covers supercomputing and acceleration, workflow management and orchestration, machine learning, big data analytics, data management, and storage. It is complemented by expertise in the domains of the pillar workflows: manufacturing, climate, and urgent computing.

The initial results of the project are available in the deliverables section of the project website (see https://eflows4hpc.eu/deliverables/). The source code and documentation are publicly available as well (see https://github.com/eflows4hpc and https://eflows4hpc.readthedocs.io/en/latest/).

Rosa M. Badia (Barcelona Supercomputing Center)
